Published: December 7, 2025

For many university students, the AI note-taker has become as essential as the laptop itself.

The premise is simple. You press a button, and a bot joins your lecture. It records the audio, transcribes the discussion, and generates a concise summary of key concepts.

For a student juggling five courses and a part-time job, this technology feels like a productivity lifeline.

But heading into the 2026 academic year, this digital convenience is colliding with institutional policy.

University IT departments are increasingly moving to restrict unauthorized recording tools.

They cite a complex web of data privacy concerns and federal privacy rules that were written decades before AI note-taking tools existed.

The goal is not necessarily to remove AI from the learning environment. Instead, institutions are attempting to shift students away from “open” consumer apps toward secure, university-managed systems.

The Mechanics of “Shadow AI”

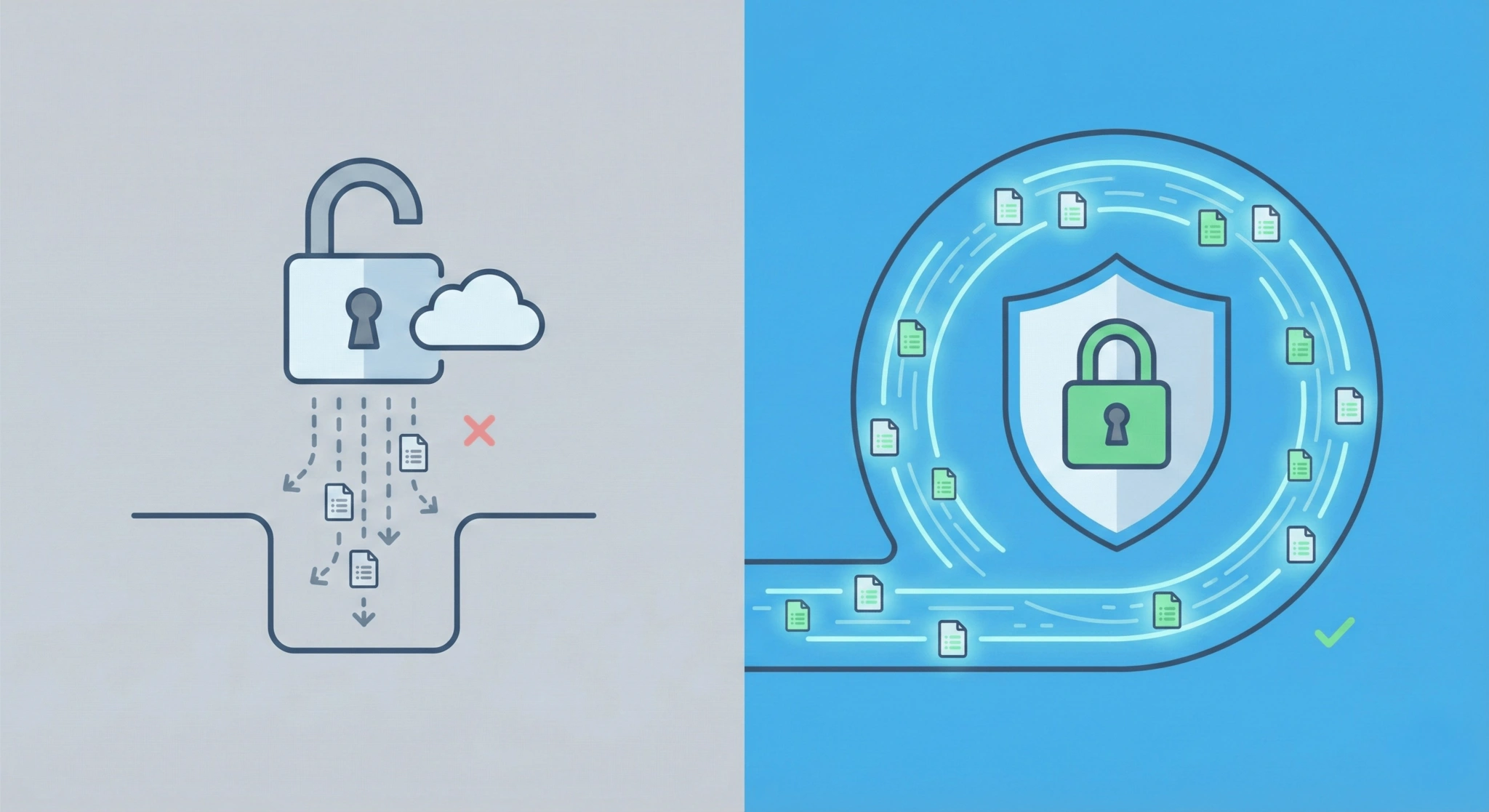

The primary driver of these new limitations is a phenomenon IT directors refer to as “Shadow AI.”

This occurs when students or faculty use unvetted software to record or process institutional data without official approval. Schools experienced a similar trend during broader edtech upgrades, including the adoption of classroom apps that reduce teacher workload.

A recent industry survey suggests that many education employees are aware of unauthorized AI use, while relatively few institutions have clear policies to manage it.

The risk lies in the architecture of free apps.

When a student logs into a free transcription service, they often grant that service broad permissions.

The recorded audio, which includes the voices of professors and other students, is frequently uploaded to a third-party cloud server.

Once that data leaves the university’s secure network, the school loses visibility into how it is stored.

For administrators, this represents an unmonitored data leak that bypasses the university’s security protocols.

The Voice Data Dilemma

The concerns regarding these recordings go beyond simple copyright. They involve student privacy.

Under the Family Educational Rights and Privacy Act (FERPA), schools that receive federal education funding are required to protect the privacy of student education records.

When an AI app captures a seminar discussion, it records more than just the lecture content. It captures the voices of every student in the room.

If a student asks a sensitive question about a disability or a personal struggle, that disclosure becomes part of the data set.

If that sensitive audio is stored on a server the university does not control, it could raise compliance questions for the institution.

Several institutions have issued guidance warning that such “incidental collection” of non-consenting student voices can raise significant privacy concerns.

The Consent Complexity

Beyond federal guidelines, universities must navigate state-level recording laws.

In states with “two-party consent” laws (often called all-party consent), recording a conversation typically requires the permission of everyone involved.

When a student launches a recording bot in a seminar without announcing it, they may inadvertently be testing legal boundaries.

While some argue that classroom lectures are public, the trend in 2026 is toward caution.

Many professors are adding specific clauses to their syllabi regarding “undisclosed recording,” treating the unauthorized use of AI bots as a potential violation of academic conduct.

The Shift to “Walled Gardens”

Despite these risks, universities recognize that AI transcription is a powerful learning tool.

The solution emerging in 2026 is not a total prohibition, but a shift toward Enterprise Licensing.

Many schools are purchasing campus-wide licenses for secure platforms that function inside a “walled garden,” in line with the broader 2026 movement toward AI-powered classroom tools.

These enterprise versions differ significantly from the free apps students download.

- Local Processing: Data is often processed locally on the device or within a private cloud instance.

- No Training: Contracts explicitly forbid the vendor from using university data to train their public AI models.

- Data Ownership: The university retains full control of the transcripts and recordings.
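For readers who think in code, the three criteria above can be expressed as a simple checklist. This is a minimal sketch, not any university's actual vetting process: the `ToolProfile` class, its field names, and the `unmet_criteria` function are all hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolProfile:
    """Hypothetical description of a transcription tool under review."""
    name: str
    local_or_private_cloud: bool      # "Local Processing" criterion
    trains_on_university_data: bool   # violates "No Training" if True
    university_owns_data: bool        # "Data Ownership" criterion


def unmet_criteria(tool: ToolProfile) -> list[str]:
    """Return the names of the criteria the tool fails to meet."""
    problems = []
    if not tool.local_or_private_cloud:
        problems.append("Local Processing")
    if tool.trains_on_university_data:
        problems.append("No Training")
    if not tool.university_owns_data:
        problems.append("Data Ownership")
    return problems


# Illustrative profiles only; neither describes a real product.
free_app = ToolProfile("free-app", False, True, False)
campus_tool = ToolProfile("campus-tool", True, False, True)

print(unmet_criteria(free_app))
print(unmet_criteria(campus_tool))
```

A tool with an empty list of unmet criteria would match the enterprise profile described above. In practice, these properties come from contract terms and security reviews rather than from a form of three checkboxes.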

By providing these “official” tools, universities hope to crowd out the use of insecure, shadow apps.

Deep Dive: The “Personal Podcast” Tool

Most AI tools just summarize text. Google NotebookLM is different because it draws its answers only from the documents you upload.

You can upload your PDF textbooks or lecture notes, and it becomes an instant expert on those specific files. It cites its sources for every answer, reducing the risk of AI hallucinations.

The wildest feature? It can generate a two-host audio podcast discussing your notes. You can listen to your chemistry homework while walking to class.


The Accommodation Nuance

A distinct policy gap remains for students with disabilities.

While general student bodies face tighter restrictions, Disability Services offices continue to authorize recording tools for eligible students.

Many universities provide accessible recording technology to students with auditory or processing impairments.

This creates a necessary operational split in 2026 policy:

- General Use: Unauthorized personal recording apps are often discouraged to protect class privacy.

- Accommodations: Approved students receive access to compliant tools governed by strict non-disclosure agreements.

This framework ensures that accessibility is maintained without compromising the privacy of the broader classroom environment.

What Students Can Expect

The era of unrestricted classroom recording is winding down.

Students entering the 2026 academic year should anticipate clearer boundaries around digital tools.

- Network Filters: Campus Wi-Fi networks may begin to block traffic to known non-compliant transcription servers.

- Syllabus Clarity: Professors are increasingly distinguishing between “approved” study aids and “unapproved” recording devices in their course rules.

- Official Alternatives: Students will likely be pushed toward specific, school-vetted apps that comply with institutional security standards.
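To make the network filter idea concrete, here is a minimal sketch of how a domain blocklist check might work. It assumes a plain hostname blocklist. The domains shown are reserved example names, not real transcription services, and `BLOCKED_DOMAINS` and `is_blocked` are hypothetical names chosen for illustration. Real campus filters are typically built into DNS or firewall products and may work quite differently.

```python
# Placeholder blocklist; these are reserved example domains, not real services.
BLOCKED_DOMAINS = {"transcribe.example.com", "notes.example.net"}


def is_blocked(hostname: str, blocked: set[str] = BLOCKED_DOMAINS) -> bool:
    """Return True if the hostname, or any parent domain of it, is blocklisted."""
    # Normalize case and drop a trailing dot so "Example.com." matches "example.com".
    host = hostname.strip().lower().rstrip(".")
    labels = host.split(".")
    # Check the full hostname first, then each successively shorter parent domain.
    for i in range(len(labels)):
        if ".".join(labels[i:]) in blocked:
            return True
    return False


print(is_blocked("api.transcribe.example.com"))  # subdomain of a blocked entry
print(is_blocked("library.example.edu"))         # not on the blocklist
```

Matching on whole domain labels, rather than on raw substrings, keeps an unrelated host such as `nottranscribe.example.com` from being caught by an entry for `transcribe.example.com`.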

The technology is staying in the classroom. But the data is staying on campus.

School Aid Specialists provides independent reporting on education technology trends. We are not affiliated with the U.S. Department of Education. For specific recording policies, consult your institution’s handbook.

Sarah Johnson is an education policy researcher and student-aid specialist who writes clear, practical guides on financial assistance programs, grants, and career opportunities. She focuses on simplifying complex information for parents, students, and families.